Integration testing is an important phase of the software development lifecycle that validates whether individual software modules interact as expected. While unit tests make sure that the individual modules of software work as expected, integration tests make sure that these modules work harmoniously when integrated as a whole system.

As software systems grow in complexity, integration testing becomes increasingly important. The interactions between different components can become a major source of potential defects. Integration testing can help identify any interface defects between interacting software components and ensure that data flows correctly between them.

Without proper integration testing, the software might work in isolated units but fail when combined. This can lead to increased maintenance costs, customer dissatisfaction, and potential business losses.

But be warned, integration testing is not without its challenges. This is primarily because of the large number of possible interactions between the individual software components, the complexity of the underlying systems, and the potential for unforeseen emergent behaviors. Additionally, setting up the right environment for integration testing, making sure that all components are available, and getting them to behave as they would in real-world scenarios can be intricate and time-consuming.

In this article, you’ll learn about the common pitfalls developers encounter during integration testing in Java. For each of these pitfalls, you’ll learn about effective solutions to help you overcome them.

Pitfalls of Integration Testing in Java

As with any testing phase, integration testing comes with its own set of challenges. In the subsequent sections, you’ll learn about several common pitfalls you may encounter during integration testing, along with real-world examples. So, let’s jump right in.

Complex Dependencies

The management of complex dependencies in Java can pose significant challenges when doing integration testing. Java applications, especially those that are developed using comprehensive frameworks like Spring or Hibernate, often intertwine with a multitude of dependencies. These can range from other software modules to third-party libraries or even external systems, such as databases and web services.

The presence of these dependencies can introduce a layer of complexity that makes setting up, executing, and maintaining integration tests daunting. The issue is that each of these dependencies can have its own set of requirements, configurations, and states that need to be managed. This means you have to set up a controlled environment for integration testing. In essence, you’re not just testing your application; you’re testing how well it plays with a host of other components, each with its own quirks and configurations:

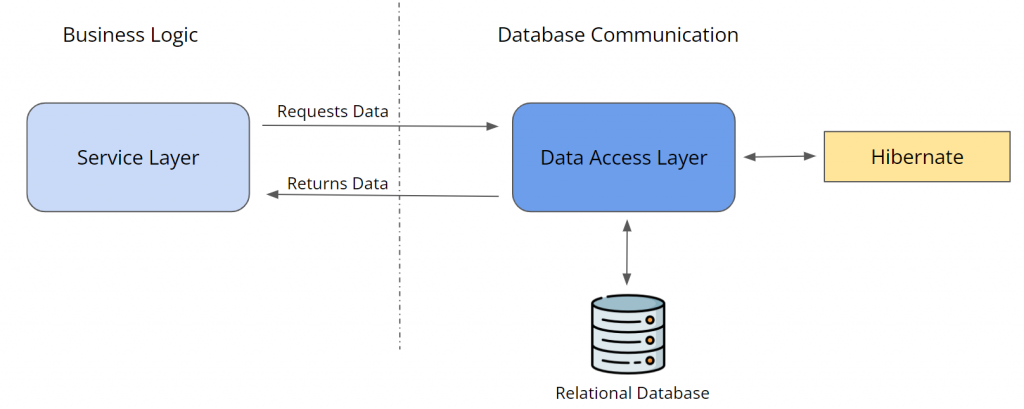

For instance, consider a scenario where a Java application was built using the Spring framework and heavily relies on a relational database to store and retrieve user profiles. This application has two main layers: a service layer containing business logic and a data access layer that communicates directly with the database.

When you’re performing integration testing to validate the interaction between these layers, you’re also implicitly testing the database and any third-party libraries that the data access layer uses. If the database isn’t set up with the exact expected test data, the service layer might not retrieve the expected user profile, causing the test to fail.

Additionally, the state of the database can change between test runs. For instance, if a test adds a new user profile, subsequent tests might fail due to duplicate entries. This isn’t just a matter of your code’s logic; it’s also about how well your code interacts with external dependencies under different conditions.

In the face of a challenge like this one, Testcontainers offers an effective solution. Testcontainers facilitates the creation of containerized environments for each dependency. This ensures that every test has its own isolated setup.

For the Java application in question, which heavily relies on a relational database, Testcontainers can instantiate a fresh, containerized version of that database for each test run. This ensures that the database starts in a known state and is populated with the necessary test data every single time. After the test concludes, this containerized database is discarded, which prevents any state changes from one test from impacting another.

The following code solves the problem of managing complex dependencies in Java by using Testcontainers to create and manage isolated, disposable containers for each dependency. This ensures that each test runs in a clean environment that is not affected by the state of other tests:

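import java.io.IOException;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.sql.Statement;

import org.junit.jupiter.api.Assertions;

import org.junit.jupiter.api.BeforeAll;

import org.junit.jupiter.api.Test;

import org.testcontainers.containers.PostgreSQLContainer;

import org.testcontainers.junit.jupiter.Container;

import org.testcontainers.junit.jupiter.Testcontainers;

@Testcontainers

class UserServiceIntegrationTest {

// Static field: the container is shared by all tests in this class

@Container

static PostgreSQLContainer<?> postgreSQLContainer = new PostgreSQLContainer<>("postgres:16-alpine");

// Set up your test data in this step. The execInContainer method has only been used for demonstration,

// and wrapQuery is a helper (not shown) that wraps the SQL in a command to run inside the container.

// You can use any other methods, such as Flyway migration or init scripts

@BeforeAll

static void setUp() throws IOException, InterruptedException {

postgreSQLContainer.execInContainer(wrapQuery("CREATE DATABASE test"));

postgreSQLContainer.execInContainer(wrapQuery("CREATE TABLE users (id INT PRIMARY KEY, name VARCHAR(255));"));

postgreSQLContainer.execInContainer(wrapQuery("INSERT INTO users (id, name) VALUES (1, 'John Doe');"));

}

@Test

void shouldRetrieveUserProfile() throws SQLException {

String jdbcUrl = postgreSQLContainer.getJdbcUrl();

String username = postgreSQLContainer.getUsername();

String password = postgreSQLContainer.getPassword();

// try-with-resources closes the connection, statement, and result set after the test

try (Connection conn = DriverManager.getConnection(jdbcUrl, username, password);

Statement statement = conn.createStatement();

ResultSet userProfile = statement.executeQuery("SELECT * FROM users WHERE id = 1")) {

// Assert that the user profile is returned

Assertions.assertTrue(userProfile.next());

Assertions.assertEquals("John Doe", userProfile.getString("name"));

}

}

}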

This code creates a PostgreSQLContainer instance for a PostgreSQL database. Because the field is static, the container is started once before the first test in the class and stopped automatically after the last one, so the test class always begins with a database in a clean, known state.

Then the shouldRetrieveUserProfile() method creates a connection to the database. This connection can be used to execute queries and retrieve data. In this example, the test simply retrieves the user profile with the ID 1.

Testcontainers makes it possible to write integration tests that are isolated from each other and that do not rely on the state of external dependencies. This can help to improve the reliability and efficiency of your integration tests.

Longer Test Execution Times

Integration testing in Java involves testing the interactions between different units or components of a software application. Because it tests multiple components together, it often requires more time than unit tests.

For instance, imagine a Java application where there are multiple services interacting with each other and with a database. An integration test might involve setting up the database, populating the database with test data, starting up all the services, and then running tests to make sure they interact correctly. This is far more time-consuming than a unit test that would simply check if a single method returns the expected output for a given input.

As the test suite grows, the time it takes to run all the integration tests can become prohibitive. This can lead to developers running the tests less frequently, which, in turn, can result in bugs being detected later in the development process when they’re more costly and time-consuming to fix.

One approach to mitigate lengthy integration test times is to implement the Singleton Containers Pattern. This method involves reusing the same container instance across multiple tests, minimizing the overhead of starting and stopping containers for each test case. Implementing this approach can expedite the test execution process by reducing the setup and teardown durations.
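The lifecycle behind this pattern can be sketched without Docker. The following is a minimal illustration, not Testcontainers code: `FakeDatabaseContainer` is a hypothetical stand-in that only counts how often it is started, and the class names are made up for the example. The point it shows is that a container held in a static field of a shared base class, and started in a static initializer, is started once no matter how many test classes extend that base class:

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for a real container; it only counts how often it is started.
final class FakeDatabaseContainer {
    static final AtomicInteger START_COUNT = new AtomicInteger();
    private boolean running;

    void start() {
        running = true;
        START_COUNT.incrementAndGet();
    }

    boolean isRunning() {
        return running;
    }
}

// Shared base class: the static initializer runs once, when the class is first loaded.
abstract class AbstractIntegrationTest {
    static final FakeDatabaseContainer DATABASE = new FakeDatabaseContainer();

    static {
        DATABASE.start();
    }
}

// Two "test classes" that reuse the same already-started container.
class UserServiceIT extends AbstractIntegrationTest {
    boolean databaseIsUp() {
        return DATABASE.isRunning();
    }
}

class OrderServiceIT extends AbstractIntegrationTest {
    boolean databaseIsUp() {
        return DATABASE.isRunning();
    }
}
```

In a real suite, you would replace the stand-in with an actual container (for example, a `PostgreSQLContainer`) and call its `start()` method in the static initializer. The container then stays up for the whole test run instead of being restarted for every test class, which is where the time savings come from. The trade-off is that tests now share one database, so they need to clean up or reseed their data themselves.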

Difficult Management and Maintenance of Test Data

Integration testing often requires a specific set of data to be present in the system to ensure that different components or modules of an application work together as expected. Managing this data can be challenging for several reasons:

- Data might need to be in a specific state before a test can run.

- Tests might modify the data and potentially affect other tests.

- Data consistency across different testing environments can be difficult to maintain.

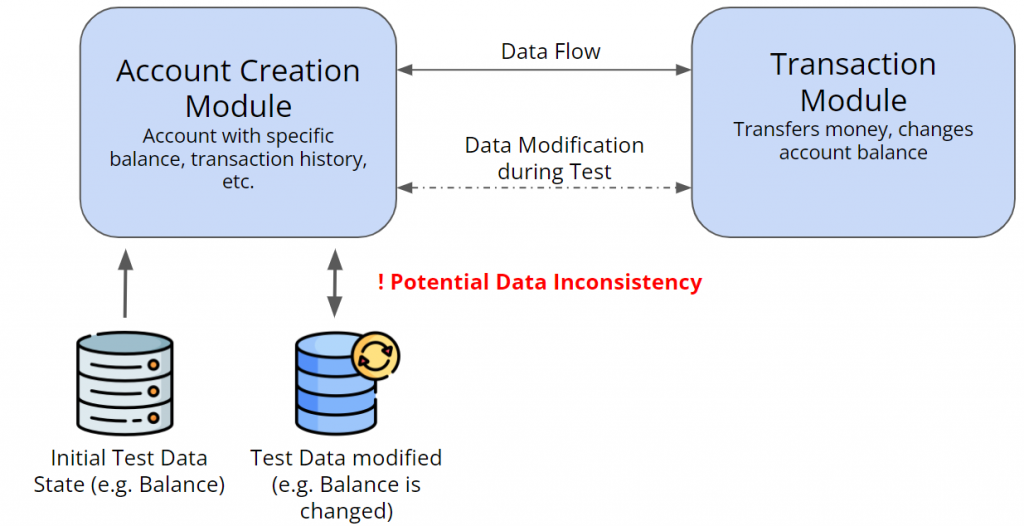

Imagine a banking application where you’re testing the integration of the account creation module with the transaction module. These modules need to work seamlessly together.

However, it gets tricky when data flows between them.

Before running any integration tests, the data must be in a specific initial state. In a banking application, this could mean having an account with a specific balance, transaction history, and other attributes. As tests run, the transaction module may modify this initial data state. For instance, if a test in the transaction module transfers money from one account to another, the account balances change. Subsequent tests that expect the original balances will then fail, not because there’s a bug in the application but because the test data has changed.

Another layer of complexity is ensuring that this initial data state and any modifications are consistent across different testing environments. Inconsistencies here can lead to false negatives (tests failing when there is no real defect) or even false positives (tests passing when there is a defect). Both waste developers’ and testers’ time and reduce confidence in the testing process.

Testcontainers can be helpful when it comes to data management because it allows developers to instantiate containerized versions of databases for each individual test or suite. This approach ensures that every test class interacts with a fresh, isolated database, initialized to the desired state.

In this banking application scenario, this would mean that each test has access to an account with a specific balance, untouched by other tests. As a result, any modifications made during a test remain confined to that test’s environment.
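To make the effect of a known starting state concrete, here is a small sketch that uses a plain in-memory map instead of a database. `AccountStore`, `TransferScenarios`, the account names, and the balances are all hypothetical and exist only for this illustration. The `resetToBaseline()` method plays the role of the seed step that runs before each test:

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory stand-in for the accounts table of the banking example.
final class AccountStore {
    private final Map<String, Long> balances = new HashMap<>();

    // Drops all existing data and loads the known baseline, like a per-test seed step.
    void resetToBaseline() {
        balances.clear();
        balances.put("alice", 1_000L);
        balances.put("bob", 500L);
    }

    void transfer(String from, String to, long amount) {
        long available = balances.get(from);
        if (amount > available) {
            throw new IllegalArgumentException("insufficient funds");
        }
        balances.put(from, available - amount);
        balances.merge(to, amount, Long::sum);
    }

    long balanceOf(String account) {
        return balances.get(account);
    }
}

final class TransferScenarios {
    // Plays the role of a test method: moves money and returns Alice's balance afterwards.
    static long transferThenReadAlice(AccountStore store) {
        store.transfer("alice", "bob", 300L);
        return store.balanceOf("alice");
    }
}
```

If `transferThenReadAlice` runs twice on the same store without a reset, the second call sees the leftover state and returns 400 instead of 700. Calling `resetToBaseline()` before each run makes the result 700 every time, which is the behavior a fresh or reseeded database gives your real tests.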

The following code shows how Testcontainers allows you to quickly load test data before running tests:

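import java.io.IOException;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.sql.Statement;

import org.junit.jupiter.api.Assertions;

import org.junit.jupiter.api.BeforeEach;

import org.junit.jupiter.api.Test;

import org.testcontainers.containers.MySQLContainer;

import org.testcontainers.junit.jupiter.Container;

import org.testcontainers.junit.jupiter.Testcontainers;

@Testcontainers

class TestcontainersIntegrationTest {

@Container

private static MySQLContainer<?> mysql = new MySQLContainer<>("mysql:8.1.0");

@BeforeEach

public void setupDatabase() throws IOException, InterruptedException {

// Clear the database and populate it with fresh test data before each test.

// wrapQuery is a helper (not shown) that wraps the SQL in a command to run inside the container.

mysql.execInContainer(wrapQuery("DROP DATABASE IF EXISTS test"));

mysql.execInContainer(wrapQuery("CREATE DATABASE test"));

mysql.execInContainer(wrapQuery("USE test;"));

mysql.execInContainer(wrapQuery("CREATE TABLE users (id INT PRIMARY KEY, name VARCHAR(255));"));

mysql.execInContainer(wrapQuery("INSERT INTO users (id, name) VALUES (1, 'John Doe');"));

}

@Test

void testWithData() throws SQLException {

String jdbcUrl = mysql.getJdbcUrl();

String username = mysql.getUsername();

String password = mysql.getPassword();

// try-with-resources closes the connection, statement, and result set after the test

try (Connection conn = DriverManager.getConnection(jdbcUrl, username, password);

Statement statement = conn.createStatement();

ResultSet resultSet = statement.executeQuery("SELECT * FROM users")) {

// Assert that the data is present (expected value first, actual value second)

Assertions.assertTrue(resultSet.next());

Assertions.assertEquals("John Doe", resultSet.getString("name"));

}

}

}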

In this example, you create a MySQL container using the @Container annotation. This annotation tells Testcontainers to start a MySQL container before running the tests. Next, you populate the database with test data using the execInContainer() method, which runs a command inside the container; the wrapQuery helper (not shown) is assumed to wrap each SQL statement in such a command. Finally, you create a connection to the database and query it. You can be sure that all the data is present since you populated it yourself.

Additionally, Testcontainers lets you maintain sets of test data. This can be useful if you want to test different scenarios or if you want to make sure that your tests are always consistent. To do this, you can use the .withInitScript() method. The method takes the path of a script on the classpath as a parameter and runs that script against the database once the container has started. This is how you would use it when defining the container:

@Container

private static MySQLContainer<?> mysql =

new MySQLContainer<>("mysql:8.1.0")

.withInitScript("some-path/load-data.sql");

Bad Test Design

Integration tests help verify the interaction between different components or modules of an application. A test that is poorly designed can lead to a number of issues, including false positives/negatives, flaky tests, or tests that are hard to maintain. Bad test design often originates from not clearly defining what the test is supposed to test or not isolating the components that should be tested.

For example, suppose you’re testing the integration between a user registration service and an email notification service in a web application. Instead of mocking the email service or using a test email server, the test is designed to send real emails to a test account and then check that account to confirm receipt. This test design has several issues. First, it makes the test dependent on external factors like email delivery speed. This can introduce flakiness. Second, if the email service or the test email account has issues, the test will fail, even if the registration service is working. This can lead to false negatives and wasted time troubleshooting nonexistent issues in the registration service. The code for this bad test design could look like this:

public class BadIntegrationTest {

@Test

public void testUserRegistrationWithEmail() {

UserRegistrationService userRegistrationService = new UserRegistrationService();

EmailNotificationService emailNotificationService = new EmailNotificationService();

// Register the user and send a real email

boolean registrationResult = userRegistrationService.registerUser("test@example.com", emailNotificationService);

// Assume checkEmailAccount() checks the test email account for a new email

boolean emailReceived = checkEmailAccount("test@example.com");

assertTrue(registrationResult && emailReceived);

}

private boolean checkEmailAccount(String email) {

// Code to check the test email account for a new email

return true; // Assume email is received for this example

}

}

Here, the test sends a real email to a test account and then checks that account to confirm receipt. This example is problematic because it relies on an actual email service and a test email account, which makes the test susceptible to external factors like email delivery speed and potential issues with the email service or test account. This can lead to flaky tests and false negatives.

A solution to this problem would be mocking or stubbing to isolate the component under test. Instead of sending real emails, the developer can mock the email service to simulate its behavior. This way, the test only verifies the interaction between the user registration service and the mocked email service without any external dependencies.
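As a rough sketch of this idea, the following example replaces the email service with a hand-written test double instead of a mocking library. The `EmailSender` interface, `RecordingEmailSender`, and `RegistrationService` are hypothetical names introduced for this illustration; they are not the classes from the example above, whose internals aren't shown:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical abstraction over the email service, introduced for this sketch.
interface EmailSender {
    void send(String recipient, String subject);
}

// Test double that records recipients instead of delivering real emails.
final class RecordingEmailSender implements EmailSender {
    final List<String> recipients = new ArrayList<>();

    @Override
    public void send(String recipient, String subject) {
        recipients.add(recipient);
    }
}

// Hypothetical registration service that depends only on the EmailSender interface.
final class RegistrationService {
    private final EmailSender emailSender;

    RegistrationService(EmailSender emailSender) {
        this.emailSender = emailSender;
    }

    boolean registerUser(String email) {
        if (email == null || !email.contains("@")) {
            return false; // reject obviously invalid addresses without sending anything
        }
        emailSender.send(email, "Welcome!");
        return true;
    }
}
```

A test can then pass a `RecordingEmailSender` to the service, register a user, and assert on the recorded recipients. Nothing leaves the JVM, so delivery delays or mailbox outages can no longer make the test fail.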

When testing email sending logic, you can use libraries like Mockito. However, mocking the entire email sending logic could overlook bugs in it. A better alternative is to run a tool such as GreenMail or MailHog with Testcontainers, which gives you a real but disposable mail server so that the email sending logic itself is exercised, as illustrated in the following example:

@SpringBootTest

@Testcontainers

public class GoodIntegrationTest {

// Static so that it is already running in @BeforeAll.

// MailHog listens for SMTP on port 1025 and serves its HTTP API on port 8025.

@Container

public static GenericContainer<?> emailServer = new GenericContainer<>("mailhog/mailhog:v1.0.1")

.withExposedPorts(1025, 8025);

private static EmailNotificationService emailNotificationService;

@Autowired

private UserRegistrationService userRegistrationService;

@BeforeAll

public static void setUp() {

// Configure the email service to use the mock email server

emailNotificationService = new EmailNotificationService();

emailNotificationService.setSmtpHost(emailServer.getHost());

emailNotificationService.setSmtpPort(emailServer.getMappedPort(1025));

}

@Test

public void testUserRegistrationWithEmail() {

// Register the user and send an email to the mock server

boolean registrationResult = userRegistrationService.registerUser("test@example.com", emailNotificationService);

// Assume checkMockEmailServer() checks the mock email server for a new email

boolean emailReceived = checkMockEmailServer("test@example.com");

assertTrue(registrationResult && emailReceived);

}

private boolean checkMockEmailServer(String email) {

// Code to check the mock email server for a new email

return true; // Assume email is received for this example

}

}

This good example is more robust because it uses Testcontainers to run a mock email server that isolates the components under test. This eliminates dependencies on external services.

Limited Test Isolation

Test isolation ensures that each test is independent and does not rely on the state or outcome of other tests. In integration testing, limited test isolation occurs when tests are not fully independent. This can lead to the outcome of one test affecting the outcome of another test due to shared resources, shared state, or side effects from one test impacting subsequent tests.

For instance, consider an e-commerce application where the developer is testing the integration of the shopping cart module with the inventory module. One test adds a product to the cart and checks if the inventory decreases. Another test, running after the first one, checks if a product is available in inventory without considering the actions of the previous test.

The consequence of this is that if the first test decreases the inventory of a product to zero, the second test will fail, not because of an actual issue with the inventory check but because the previous test modified the state of the inventory. This leads to false negatives, where tests fail even though the functionality is correct.

Addressing test isolation is another pitfall where Testcontainers can help. Testcontainers lets you create containerized instances of application dependencies, which ensures that each test operates within its own isolated environment. For the previous e-commerce example, this means that every test can interact with a fresh, containerized version of the inventory database. Before the test begins, the database can be initialized to a specific state that ensures consistency and true isolation. Once the test concludes, the container is discarded, which makes sure no residual state affects subsequent tests.

To better demonstrate the underlying problem, consider the following code example of an integration test that demonstrates limited test isolation:

@SpringBootTest

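class InventoryIntegrationTest {

// Application services under test, injected by Spring (type names assumed from the field names)

@Autowired

private ShoppingCartService shoppingCartService;

@Autowired

private InventoryService inventoryService;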

@Test

void testAddProductToCartDecreasesInventory() {

// Add a product to the cart

shoppingCartService.addProductToCart("product-1");

// Check that the inventory of the product decreases

assertThat(inventoryService.getInventory("product-1")).isEqualTo(99);

}

@Test

void testProductAvailableInInventory() {

// Check that the product is available in inventory

assertThat(inventoryService.isProductAvailableInInventory("product-1")).isTrue();

}

}

In this code, the first test adds a product to the cart, which decreases the inventory of the product. Both tests share the same inventory state, so their results depend on what ran before them: the first test only passes if the stock starts at exactly 100, and the second test fails if an earlier test has reduced the stock of the product to zero.

To resolve such issues, you can use Testcontainers. This ensures that each test has its own isolated environment and that the state of the inventory is not affected by the previous tests.

Here’s an example of a Testcontainers-based integration test that fixes the problem:

@SpringBootTest

@Testcontainers

class InventoryIntegrationTest {

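// Non-static field: Testcontainers spins up a new container for every test method

@Container

PostgreSQLContainer<?> container = new PostgreSQLContainer<>("postgres:16-alpine");

// Application services under test (type names assumed from the field names);

// pointing the application's datasource at the container is omitted here

@Autowired

private ShoppingCartService shoppingCartService;

@Autowired

private InventoryService inventoryService;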

@Test

void testAddProductToCartDecreasesInventory() {

// Add a product to the cart

shoppingCartService.addProductToCart("product-1");

// Check that the inventory of the product decreases

assertThat(inventoryService.getInventory("product-1")).isEqualTo(99);

}

@Test

void testProductAvailableInInventory() {

// Check that the product is available in inventory

assertThat(inventoryService.isProductAvailableInInventory("product-1")).isTrue();

}

}

The @Container annotation is used on a non-static field, which means that for each test method, a new PostgreSQL container is instantiated. This effectively solves the test isolation problem by ensuring that both tests have their own version of the inventory database, initialized to the required state. However, it’s worth noting that while this approach enhances test isolation, it can significantly increase the total execution time of the test suite due to the overhead of starting and stopping a container for each test. The trade-off between isolation and performance needs to be carefully considered when designing tests.
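The difference between shared and per-test state can also be seen in miniature without any containers. The following sketch uses a hypothetical in-memory `Inventory` class as a stand-in for the inventory database; the class names and the starting stock of 1 are assumptions chosen so that the effect is easy to see:

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory stand-in for the inventory database.
final class Inventory {
    private final Map<String, Integer> stock = new HashMap<>();

    Inventory(String productId, int quantity) {
        stock.put(productId, quantity);
    }

    void removeOne(String productId) {
        stock.merge(productId, -1, Integer::sum);
    }

    boolean isAvailable(String productId) {
        return stock.getOrDefault(productId, 0) > 0;
    }
}

final class IsolationDemo {
    // Both "tests" share one inventory, so the second sees the first one's side effect.
    static boolean availabilityWithSharedState() {
        Inventory shared = new Inventory("product-1", 1);
        shared.removeOne("product-1");          // first test: add the product to the cart
        return shared.isAvailable("product-1"); // second test: availability check
    }

    // Each "test" gets its own fresh inventory, similar to a non-static @Container field.
    static boolean availabilityWithFreshState() {
        Inventory forFirstTest = new Inventory("product-1", 1);
        forFirstTest.removeOne("product-1");
        Inventory forSecondTest = new Inventory("product-1", 1);
        return forSecondTest.isAvailable("product-1");
    }
}
```

With shared state, the availability check returns false because the earlier step used up the stock. With a fresh instance per test, it returns true regardless of what ran before, at the cost of creating the state again each time.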

Conclusion

Integration testing is an important phase in the software development lifecycle. It ensures that the individual software modules work harmoniously when combined. However, the actual process of integration testing is complex, and you’ll face challenges that are difficult to overcome, including managing complex dependencies, dealing with longer test execution times, maintaining test data, designing effective tests, and ensuring proper test isolation. These pitfalls, if not addressed, can lead to unreliable test results, increased debugging time, and reduced confidence in the software’s overall functionality.

However, understanding these pitfalls is the first step toward avoiding them. By being aware of the potential challenges and actively seeking solutions, developers can ensure that their integration testing efforts are both effective and efficient.

Testcontainers has emerged as a valuable tool in addressing many of these challenges. By providing containerized environments for dependencies, Testcontainers offers a consistent and isolated testing environment, ensuring that tests are both repeatable and reliable. This not only reduces the time spent on setting up and tearing down test environments but also ensures that tests are truly independent, reducing the chances of false negatives and positives.

by Artem Oppermann

Artem is a research engineer with a focus on artificial intelligence and machine learning. He started his career during university as a freelance machine learning developer and consultant. After receiving his master’s degree in physics, he began working in the autonomous driving industry. Artem shares his passion and expertise in the field of AI by writing technical articles on the subject.